OpenAI's Whisper model outperformed many existing speech-to-text models. It is free and open source, which means you can modify it to suit your needs.

Google has since updated its Speech-to-Text API and reduced the cost as well.

The Whisper code is available on GitHub, and OpenAI later launched an API as well, since hosting Whisper yourself isn't easy.

Whisper Alternatives (API Models)

No other open-source speech recognition model is as accurate as Whisper. However, there are APIs available, and some of them are better than Whisper.

1. Deepgram

Deepgram has the fastest speech-to-text API. It is also trained on more data, so you can expect better accuracy.

Deepgram's Nova 2 model is also more affordable than the Whisper API. For higher usage (minimum 4k/year), it costs $0.0043/min, which is cheaper than the OpenAI Whisper API at $0.006/min.

Deepgram also provides real-time transcription, which can be very useful for meetings. It offers word-level transcription, summarization, topic detection, and sentiment analysis.

Deepgram offers $200 in free credits for testing.

2. Speechmatics

Speechmatics is another top-level speech-to-text API provider. Speechmatics is also one of the more expensive automated speech recognition (ASR) solutions available today.

Speechmatics offers live transcription and live translation in 49 languages. In their free plan, they provide 8 hours of transcription free per month.

Speechmatics charges an extra $0.65/hour for translation. It also provides summarization, sentiment analysis, and topic detection, similar to Deepgram.

Speechmatics will cost you $0.30/hour, which is $0.005/minute, still cheaper than the Whisper API.
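
The per-minute figure comes from dividing the hourly rate by 60. Here is a minimal Python sketch of that conversion, using only the prices quoted above. The function names are illustrative and not part of any Speechmatics SDK.

```python
def per_minute(hourly_rate: float) -> float:
    """Convert a price per hour of audio into a price per minute."""
    return hourly_rate / 60


def job_cost(hours: float, hourly_rate: float, translation_rate: float = 0.0) -> float:
    """Cost of transcribing `hours` of audio, with an optional translation surcharge."""
    return hours * (hourly_rate + translation_rate)


# Prices as quoted in this article (USD); check the provider's pricing page
# before relying on them.
TRANSCRIPTION_PER_HOUR = 0.30
TRANSLATION_EXTRA_PER_HOUR = 0.65

print(f"${per_minute(TRANSCRIPTION_PER_HOUR):.4f}/minute")
total = job_cost(10, TRANSCRIPTION_PER_HOUR, TRANSLATION_EXTRA_PER_HOUR)
print(f"10 hours with translation: ${total:.2f}")
```

With translation enabled, the effective rate is $0.95/hour, so translated audio costs roughly three times as much as plain transcription.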

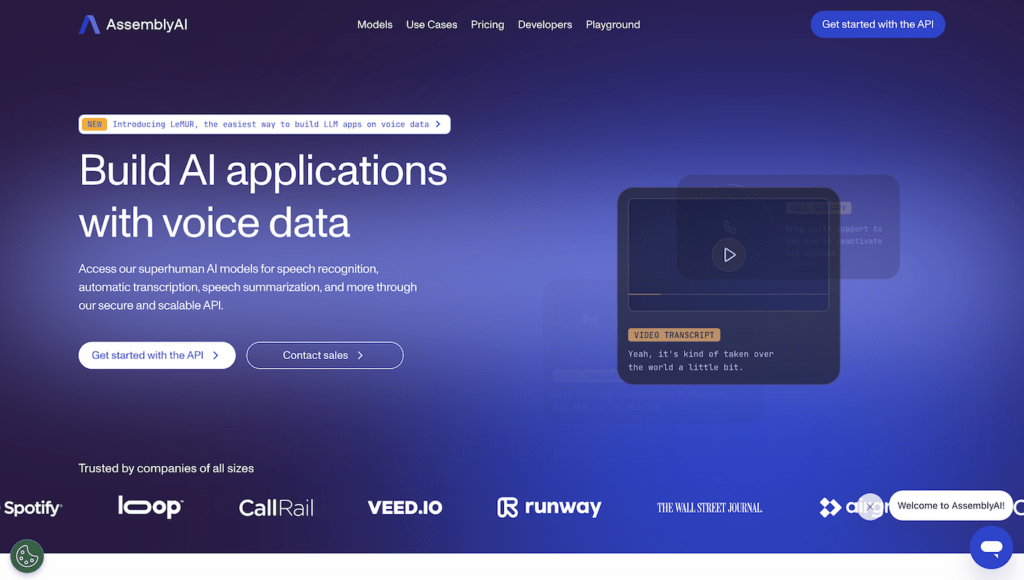

3. Assembly AI

Assembly AI basically fine-tuned the Whisper model. It offers both batch and real-time transcription.

Assembly AI offers filler word filtering, profanity filtering, and word search. It also offers Audio Intelligence, which includes features like entity detection, content moderation, sentiment analysis, summarization, and topic detection.

Assembly AI recently launched its new speech recognition model, Conformer 2, which is trained on 1.1 million hours of data.

Assembly AI takes audio analysis to the next level with LeMUR, which is, in short, a large language model.

It can create a custom summary, answer questions, and perform other custom tasks.

4. Google Chirp

Google's Speech-to-Text API took the biggest hit after Whisper's release. That's why Google launched a new speech recognition API, Google Chirp.

Basically, it is trained on more data and is more affordable than their previous API. Currently, it is not available for all languages and is still in beta.

Its accuracy is better than Whisper's in some languages; in English, however, the results are indistinguishable.

Google is using customer data to make the Chirp model even better. You can pay more if you don’t want Google to use your data for training.

I recently published an article comparing Whisper and the Google Speech-to-Text API.

5. Third-Party Whisper API Providers

The OpenAI Whisper API offers quite limited functionality. The biggest limitation is file size.

Files can't be larger than 25 MB. Many third-party Whisper API providers offer more features at lower prices.

For example, Datacrunch offers a Whisper API at $0.0010/minute, while OpenAI charges $0.006/minute.

Datacrunch's file limit is 200 MB, and using their API is also pretty easy.
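
To see what these per-minute differences mean in practice, here is a small Python sketch that ranks the providers by cost for a given volume of audio. The prices are the ones quoted in this article and may be out of date. The Deepgram figure is the higher-usage rate, and the dictionary and function names are made up for illustration.

```python
# Per-minute prices as quoted in this article (USD). They may be out of date.
PRICE_PER_MINUTE = {
    "OpenAI Whisper API": 0.006,
    "Speechmatics": 0.005,
    "Deepgram Nova 2": 0.0043,  # higher-usage rate quoted earlier
    "Datacrunch Whisper API": 0.0010,
}


def monthly_costs(minutes: int) -> list[tuple[str, float]]:
    """Return (provider, cost) pairs for `minutes` of audio, cheapest first."""
    costs = [(name, price * minutes) for name, price in PRICE_PER_MINUTE.items()]
    return sorted(costs, key=lambda item: item[1])


for name, cost in monthly_costs(10_000):
    print(f"{name:<24} ${cost:,.2f}")
```

Price is only one factor. Accuracy, file limits, and extra features differ between providers, so treat this as a starting point for a comparison.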

Deepgram, one of the best alternatives to Whisper, has also launched its own Whisper Cloud API at a lower price than OpenAI.

Deepgram offers a 2 GB file size limit and includes all Whisper models. Here is the list of all Whisper API providers.
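
The file size limits above decide which provider can take a recording in one upload and how many pieces you would otherwise need. The Python sketch below is a rough planning aid under two assumptions: the limits are binary megabytes, and the file has a roughly constant bitrate, so size grows linearly with duration. The helper names are hypothetical, and actually cutting the audio would need a separate tool.

```python
import math

# Upload limits as quoted in this article; binary megabytes are an assumption.
MB = 1024 * 1024
UPLOAD_LIMIT_BYTES = {
    "OpenAI Whisper API": 25 * MB,
    "Datacrunch": 200 * MB,
    "Deepgram Whisper Cloud": 2 * 1024 * MB,
}


def providers_accepting(file_size: int) -> list[str]:
    """Providers whose quoted limit is large enough for `file_size` bytes."""
    return [name for name, limit in UPLOAD_LIMIT_BYTES.items() if file_size <= limit]


def plan_chunks(file_size: int, duration_s: float, limit: int) -> list[tuple[float, float]]:
    """Split a recording into equal time ranges that should each fit under `limit`.

    Assumes a roughly constant bitrate, so size scales linearly with duration.
    """
    n_chunks = max(1, math.ceil(file_size / limit))
    step = duration_s / n_chunks
    return [(i * step, (i + 1) * step) for i in range(n_chunks)]


size = 60 * MB  # hypothetical 60 MB recording, one hour long
print(providers_accepting(size))
print(plan_chunks(size, 3600, UPLOAD_LIMIT_BYTES["OpenAI Whisper API"]))
```

For the hypothetical 60 MB file, the plan is three 20-minute segments for the 25 MB limit, while the two larger limits accept the file as it is.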

Whisper Alternatives (Open Source)

Here are open-source speech recognition models in case you don't want to use an API. However, keep in mind that none of them is as accurate as Whisper.

Mozilla DeepSpeech

Mozilla DeepSpeech is an open-source speech-to-text engine based on Baidu’s DeepSpeech architecture. Developers can train DeepSpeech on their own datasets to customize it for unique use cases.

DeepSpeech utilizes TensorFlow and other optimized deep learning components for speech recognition. Key advantages are the ability to deploy for free after training, multi-language support, and integration of language model look-ahead for greater accuracy.

DeepSpeech also leverages beam search for decoding and an optimized runtime written in C++. The project is under active development by Mozilla to expand DeepSpeech’s capabilities. Overall, it’s a flexible open-source speech recognition option.
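
To illustrate the idea of beam search combined with a language model, here is a toy Python sketch. It is not DeepSpeech's actual decoder. The per-step character probabilities, the one-word vocabulary, and the `toy_lm` scorer are all invented for the example.

```python
import math


def beam_search(step_log_probs, beam_width=3, lm_score=None, lm_weight=0.5):
    """Toy beam search over per-step character log-probabilities.

    step_log_probs: list of dicts mapping a character to its log-probability.
    lm_score: optional function (prefix, next_char) -> log-probability bonus.
    Returns the highest-scoring string.
    """
    beams = [("", 0.0)]  # (text so far, cumulative score)
    for dist in step_log_probs:
        candidates = []
        for text, score in beams:
            for char, log_p in dist.items():
                bonus = lm_weight * lm_score(text, char) if lm_score else 0.0
                candidates.append((text + char, score + log_p + bonus))
        # Keep only the best `beam_width` hypotheses for the next step.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams[0][0]


VOCAB = {"cat"}  # hypothetical one-word vocabulary


def toy_lm(prefix, char):
    """Return 0.0 if prefix + char can still become a vocabulary word, else a penalty."""
    candidate = prefix + char
    if any(word.startswith(candidate) for word in VOCAB):
        return 0.0
    return math.log(0.1)


# Invented acoustic scores: the first step slightly prefers "k" over "c".
steps = [
    {"c": math.log(0.4), "k": math.log(0.6)},
    {"a": math.log(0.9), "o": math.log(0.1)},
    {"t": math.log(0.9), "d": math.log(0.1)},
]

print(beam_search(steps))                   # acoustic scores only
print(beam_search(steps, lm_score=toy_lm))  # with the toy language model
```

Without the language model, the search follows the acoustic scores and returns "kat". With it, hypotheses that cannot become a known word are penalized, and the result is "cat".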

Facebook wav2vec 2.0

Wav2vec 2.0 is a self-supervised speech recognition model open sourced by Facebook AI Research. It is pre-trained on large amounts of unlabeled audio, which allows it to be fine-tuned on custom speech datasets.

This makes it adaptable to unique acoustic conditions and vocabularies. Wav2vec 2.0 uses a convolutional neural network to encode raw audio inputs followed by a transformer network.
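
To get a feel for what the convolutional encoder does to raw audio, the Python sketch below computes how many feature frames it passes on to the transformer. The kernel sizes and strides are taken from the published wav2vec 2.0 base configuration, which is an assumption here since this article does not list them.

```python
# Kernel sizes and strides of the seven 1-D convolution layers in the
# published wav2vec 2.0 base configuration (an assumption here; the article
# itself does not list them).
CONV_LAYERS = [(10, 5), (3, 2), (3, 2), (3, 2), (3, 2), (2, 2), (2, 2)]


def encoder_frames(num_samples: int) -> int:
    """Number of feature frames the convolutional encoder emits for raw audio."""
    length = num_samples
    for kernel, stride in CONV_LAYERS:
        # Output length of an unpadded 1-D convolution.
        length = (length - kernel) // stride + 1
    return length


print(encoder_frames(16_000))  # one second of 16 kHz audio -> 49
```

Under these settings, the strides multiply to 320 samples, so each frame covers about 20 ms of 16 kHz audio. The transformer therefore sees a much shorter sequence than the raw waveform.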

The unsupervised pre-training step helps wav2vec 2.0 learn robust speech representations. Developers can leverage wav2vec 2.0 as a pre-trained model for transfer learning. As an open-source speech recognition model, wav2vec 2.0 provides advanced capabilities without any licensing costs.

NVIDIA NeMo

NVIDIA NeMo is an open source conversational AI toolkit that includes automatic speech recognition models. It provides pre-built ASR models like QuartzNet and Citrinet that developers can customize through transfer learning on new datasets. NeMo has utilities to handle data preprocessing, model training, optimization and deployment.

The speech recognition models are written in PyTorch and leverage optimizations like JIT compiling. NeMo aims to simplify using the latest speech research like transformer networks.

The project is actively developed by NVIDIA to expand its model catalog. With NeMo, developers get an open source toolkit to build, train and deploy customized speech recognition models.

CoreSpeech

CoreSpeech from CoreWeave is an open source speech recognition engine that is free for workloads under 1 million minutes per month.

It only requires registration to get started. CoreSpeech combines classical speech recognition approaches like HMM with deep learning models like LSTMs.

It was developed specifically for Kubernetes deployments in the cloud. The engine claims high accuracy that can match commercial solutions. CoreSpeech provides language model personalization and acoustic model transfer learning to improve precision on unique use cases.

Though not completely open in pricing, CoreSpeech offers a no-cost entry point for developers to evaluate open source speech recognition.

Final Words

With OpenAI’s revolutionary Whisper shaking up the speech recognition landscape, developers now have an abundance of options to meet various speech-to-text needs.

For those wanting an affordable full-featured API, Deepgram and AssemblyAI stand out for their real-time transcription, audio analysis, and customization capabilities.

Developers seeking a high-quality self-hosted open source solution can explore customizable models like DeepSpeech, wav2vec 2.0, NeMo, and CoreSpeech. And Google’s Chirp API promises to be a top contender in accuracy and value as it develops.

While Whisper’s astonishing accuracy is hard to match, alternatives like these provide meaningful trade-offs across cost, customization, and ease of use.

The innovations driving speech AI forward leave developers in an enviable position to pick the right speech recognition approach for their specific workflow and constraints.

Though Whisper looms large, capable alternatives exist to power speech and audio applications today and into the future.